Your Google Assistant can connect families to the North Pole

Google announced yesterday on its Canadian blog a set of new Google Assistant experiences for families. The announcement clearly positions Google Assistant around family activities, especially those involving kids.

Today, we’re introducing new experiences, designed specifically for families with kids so they can learn, play and imagine together.

Among the new experiences, Google says families “will be able to join The Wiggles,” from the Treehouse TV show, and go on a “custom Wiggles experience” commissioned by Google exclusively for the Assistant.

News for kids

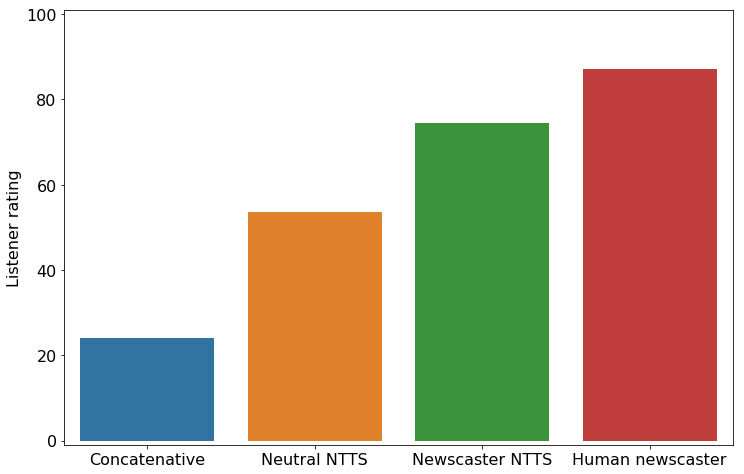

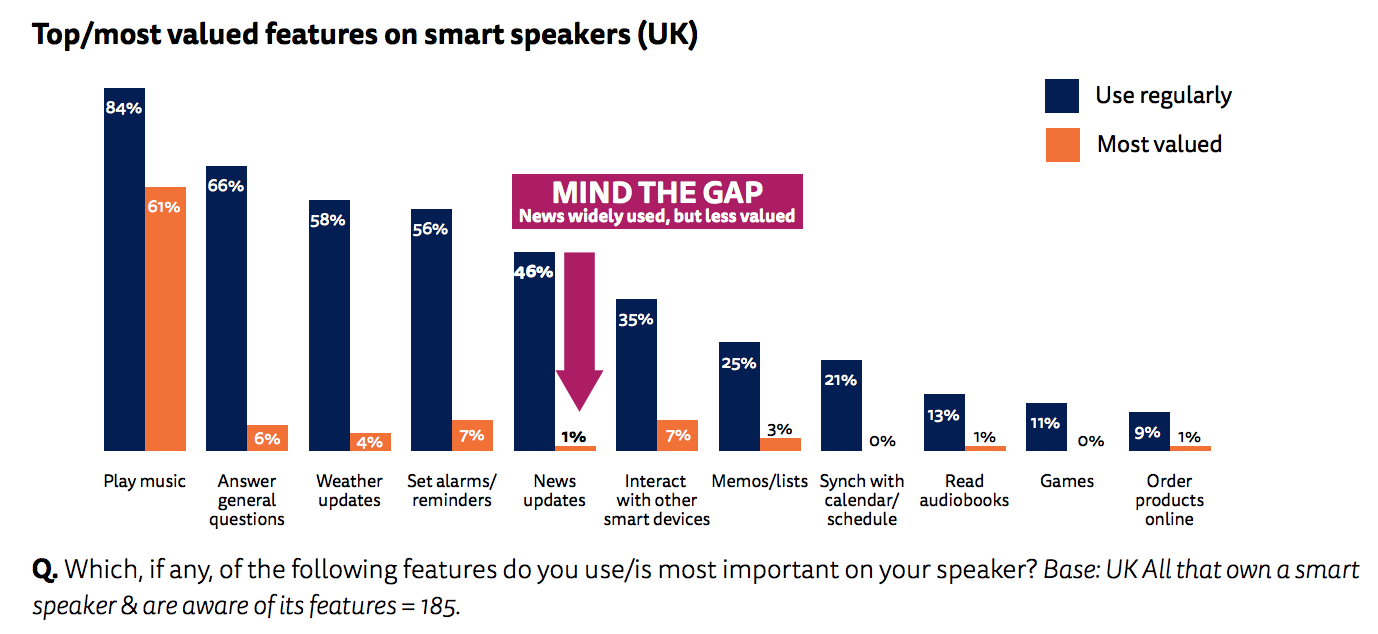

Google is also introducing news stories for kids through CBC Kids News, which can be invoked by saying “Hey Google, play CBC Kids News.” The feature covers daily local, national and international stories relevant to Canadian kids, with a focus on media literacy. Google Assistant also recently launched storytime experiences in partnership with Disney. With these new services for Canadian kids, the search giant is shifting much of its focus toward kids and the family environment. Meanwhile, Reuters released a report in which one of the main conclusions is that users don’t love news on smart speakers. By getting kids used to consuming news through Google Assistant, the company is building the future audience for news on its smart speakers. Smart.

Test listening and comprehension

Another feature announced in the blog post is Boukili Audio, an interactive activity that tests listening and comprehension with stories about animals, nutrition, music, travel and a ton of other captivating subjects, all in French. After listening to a story, Boukili Audio puts your child’s skills to the test with a series of multiple-choice questions that evaluate their French comprehension and memory, all while having fun. Boukili Audio has over 120 interactive books, more than 70 of which are exclusive to the Assistant. The experience is available in French only. To try it out, Canadian users can say: “Ok Google, Parler avec Boukili Audio” (“Talk to Boukili Audio”).

Call Santa

Just in time for Christmas, Google is also introducing calls to Santa: just say “Hey Google, call Santa.” The feature is only available in English. I can’t wait for it to reach the US to hear what Santa has to say.

Personalized Google Assistant experience for children under 13

With a parent’s permission, children under 13 can also have their own personalized Google Assistant experience when they sign in with their own account, powered by Family Link (not available in Quebec). Family Link helps parents manage their child’s Google Account while the child explores. And with Voice Match, your family can train the Assistant to recognize up to six voices.

Final thoughts

The announcement was published on Google’s Canadian blog yesterday, Wednesday, November 28. It doesn’t specify when the family experiences will be available in other regions. Google’s focus on families and kids during the holidays is very smart. It shows the company is looking ahead and is less concerned with “fixing” how current users see the Assistant. After all, Generation V is the true protagonist of voice technology.

List of additional commands for family experiences:

- “Play Lucky Trivia for Families”

- “Play Musical Chairs”

- “Play Freeze Dance”

- “Let’s play a game”

Learn 📖

- “Tell me a story”

- “Help me with my homework”

- “Tell me a fun fact”

- “How do you say ‘Let’s have crêpes for breakfast’ in French?”

- “What does a whale sound like?” (try other animals!)

- “What does a guitar sound like?” (try other instruments!)

Entertain 🌟

- “Give me a random challenge”

- “Sing a song for me”

- “Give me a tongue twister”

- “Tell me a joke”

Perfect for the Holidays 🎄

- “Tell me a Santa joke”

- “What’s your favorite Christmas cookie?”

- “Tell me a holiday fact”