GlobalWebIndex released a report last week, Voice Search: Trends to Know, a deep dive into consumer uptake of voice assistant technology. I have summed up the main points.

The report tackles three fundamentals:

- Growth prospects for voice

- The bigger picture of voice

- The implications for consumer privacy

Here are the key takeaways in each of them:

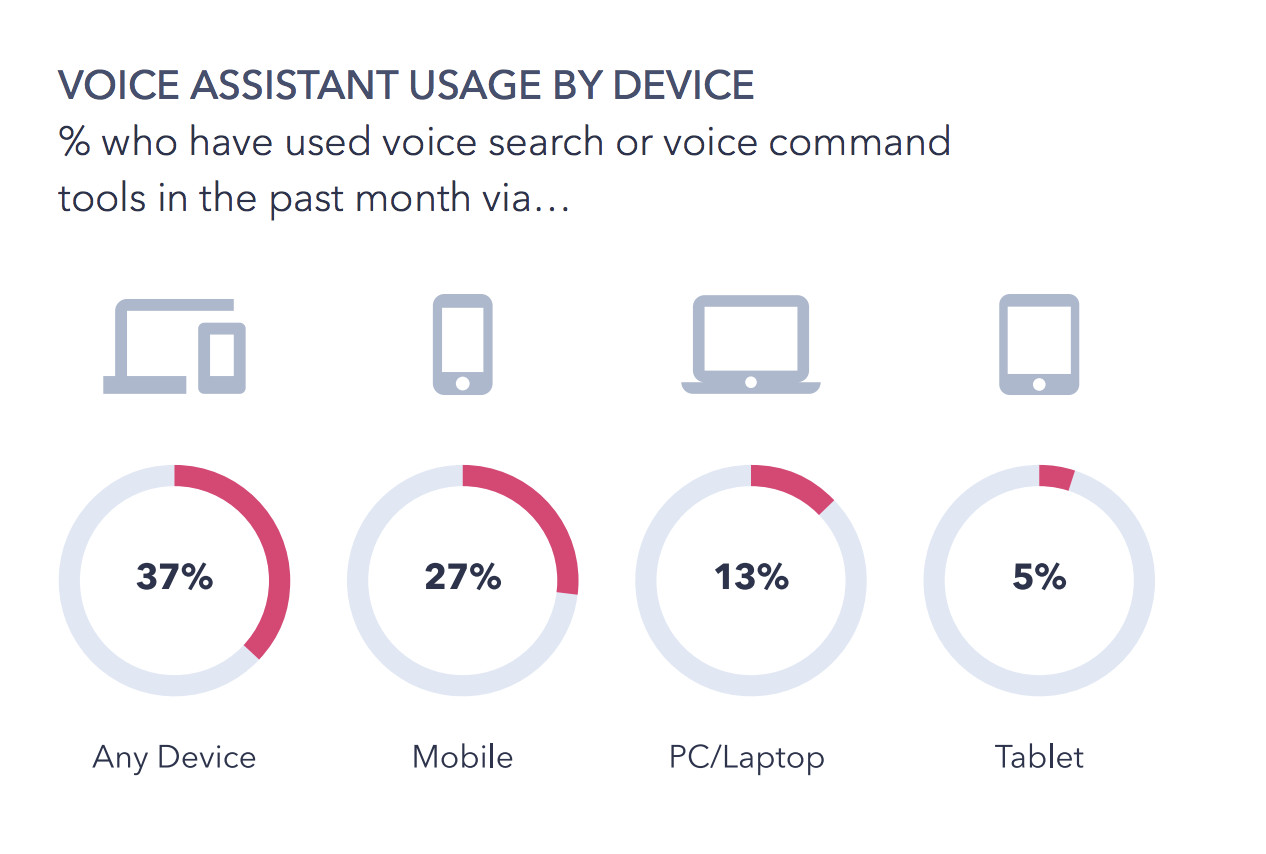

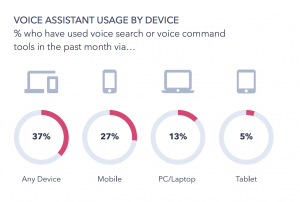

- 27% of the global online population is using voice search on mobile. It’s most popular in Asia Pacific, but 16-24s in mature English-speaking markets aren’t far behind.

- Mobile voice has the strongest ability to scale voice tech adoption at a continued rapid pace. With between 40% and 60% of consumers planning to purchase a new mobile or upgrade their existing handset in the next 12 months, the majority of these new phones will have integrated voice assistants.

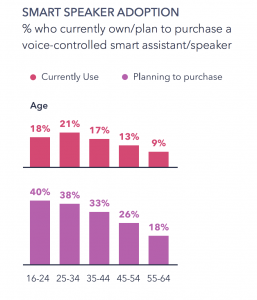

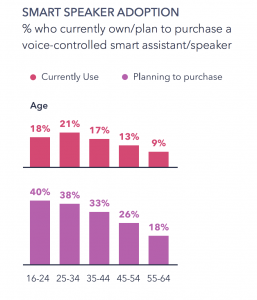

- 17% of internet users currently own a voice-controlled smart assistant, and 34% say they are interested in purchasing one.

- Privacy is a major factor for voice tech. The report identifies consumers' unfamiliarity with how their data is stored and how they can access it as the main concern. Its advice to brands is to be transparent with consumers about data collection in voice tech.

Growth prospects for voice

Consumers have a wide range of voice-powered search services at their disposal, from Siri to Cortana to Google Assistant to Baidu DuerOS. Voice-enabled smart speakers and voice assistants on mobile are the primary interfaces consumers use to engage with voice tech.

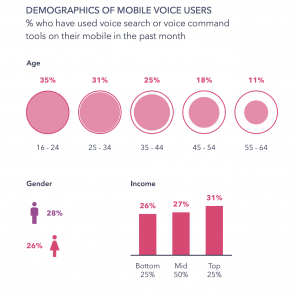

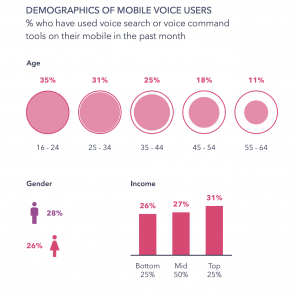

According to the report, the demographic breakdown of mobile voice users (those who used voice search or voice command tools on their mobile in the past month) is:

By gender:

Ages 16–34: a combined 66%.

The important trend in the chart is how mobile voice is driven by younger internet users. Roughly 2 in 3 mobile voice users fall within the 16-34 age bracket, giving us a clear indication of the trajectory of growth in the mobile voice market.

From a market-by-market perspective, the GlobalWebIndex data shows that mobile voice search is being driven by Asian markets, with the strongest figures in Indonesia (38%), China (36%) and India (34%).

One of the biggest obstacles smart speakers have faced is convincing consumers that they are an essential device rather than a nice-to-have.

This obstacle is partly being eroded by third-party applications for consumers, which give brands the power to engage or sell in a convenient way. Expedia is the most recent example, which I featured here a few episodes back.

As with mobile voice, ownership and intent to purchase are concentrated among younger age groups, but there is still a significant number of older consumers who say they plan to purchase one of these devices in the future. Clearly, there is widespread awareness, spanning age and income groups, of how these devices can bring value to everyday activities. A key factor in increasing this awareness has been aggressive promotional and discount periods during holiday seasons, especially from the likes of Amazon, which make these devices available even to more modest budgets.

Another key takeaway from the report is the sustained interest in smart speakers as they approach their fourth year on the market, which provides a promising outlook for the longevity of consumer use cases for these devices.

The Consumer Privacy factor

The final section of the report covers consumer privacy, focusing on users' suspicion that they are being recorded all the time. It concludes that:

The balance between convenience, privacy and security for new technologies like voice search often rests upon brands being transparent with their customers.

Future implications

GlobalWebIndex outlines social, transparency and affordability as the main implications for the future of voice tech. According to the consumer research company, Amazon is clearly ahead of the competition, which should serve as a warning for both new and traditional competitors.