It’s time small businesses had big business capability. The AI for small business

The voice community is amazing to watch as it evolves. I have been very fortunate to meet lots of people who are making an impact on voice technology and conversational interfaces. This episode is special because it features one of the startups impacting voice tech every day, and it is the first time the VoiceFirst Weekly show has welcomed a guest. We are thrilled with how it turned out.

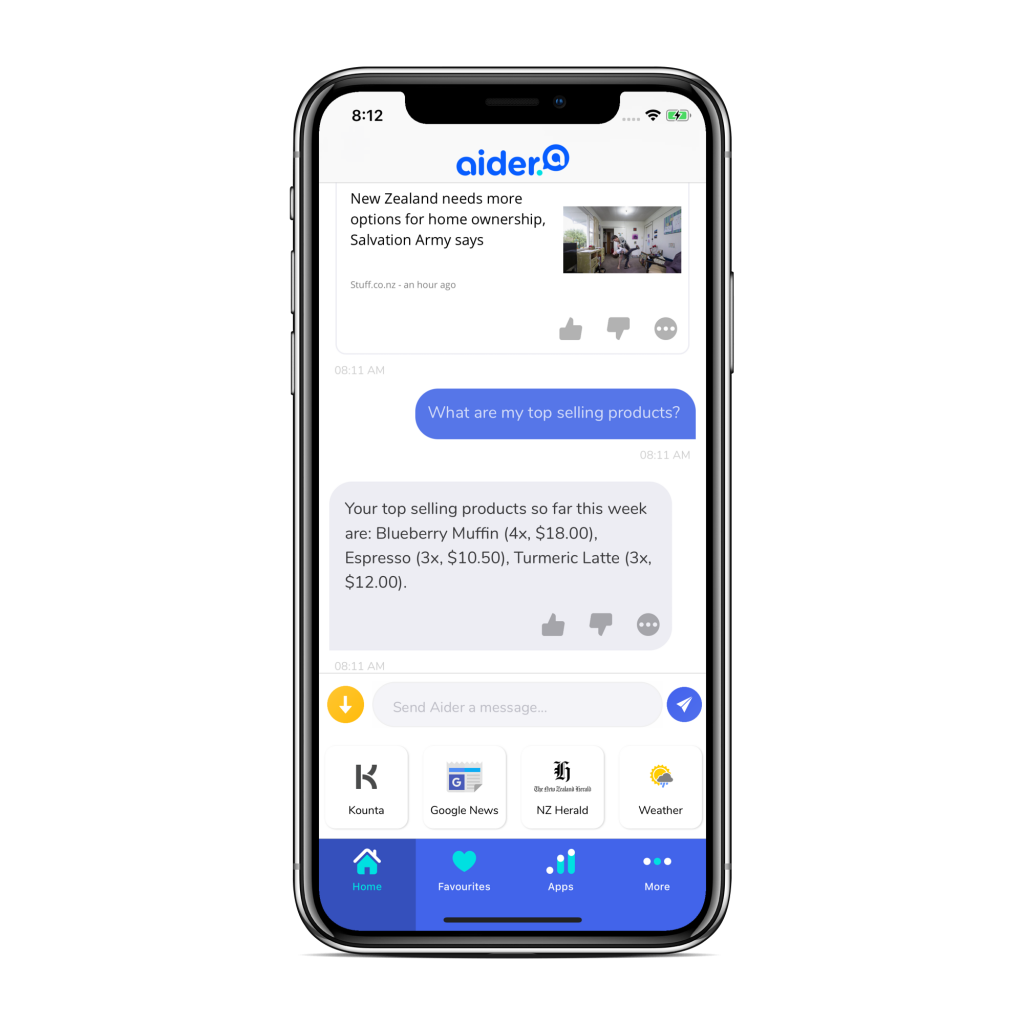

In this episode, I talk to Brendan Roberts, CEO of Aider, the AI assistant for small businesses. Aider is launching now in Australia and New Zealand, with plans for the US in 2019. Aider will help you answer questions like: What’s my top-selling product? What’s my revenue today? Who is meant to be working tomorrow, and what’s the weather going to be like? All this from your phone, taking your business context into account.

I met Brendan at the Voice Summit back in July, after arranging a meetup at the conference for folks from the Voice Entrepreneur Community on Facebook. I got to see Aider firsthand and was really impressed by its capabilities. Aider integrates with several SaaS apps that small business users might be familiar with for sales, accounting and client management, providing insights and learning from the user’s actions. What I found especially impressive for an app of this type was its ability to carry a conversation across channels, whether voice or messaging.

Without further ado, please enjoy my talk with Brendan.

You can contact Brendan on Twitter or LinkedIn. You can also try Aider and sign up for Aider beta access.